The fastai way of learning is based on the book Making Learning Whole, whose method can be summarized as:

- Play the whole game

- Make the game worth playing (reward function)

- Work on the hard parts (improve by working on your weaknesses)

Some warnings by Jeremy:

- Save your Google Colab work (it is not saved automatically)

- Don't forget to shut down your instance, otherwise you will end up paying for it.

Points to remember:

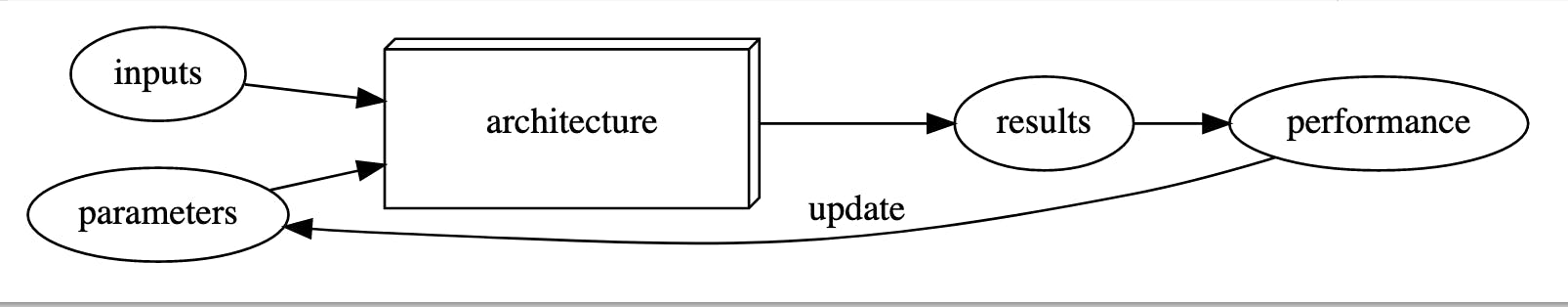

- A neural network is a universal function approximator: given an appropriate set of weights, it can in principle solve any given task.

Architecture + Parameters == Models
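To make the equation above concrete, here is a minimal sketch in plain Python (not fastai code). The names `linear`, `make_model`, `params_a` and `params_b` are hypothetical and chosen only for illustration: the architecture is the fixed functional form, and each choice of parameters turns it into a different model.

```python
# Architecture: the fixed functional form (a hypothetical one-input linear unit).
def linear(x, params):
    w, b = params
    return w * x + b

# Parameters: the numbers that training would normally adjust.
params_a = (2.0, 1.0)
params_b = (-1.0, 0.5)

# Architecture + parameters == model: same form, different behaviour.
def make_model(architecture, params):
    return lambda x: architecture(x, params)

model_a = make_model(linear, params_a)
model_b = make_model(linear, params_b)

print(model_a(3.0))  # 7.0
print(model_b(3.0))  # -2.5
```

Training a real network amounts to searching for the parameter values that make the resulting model useful for the task.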

Things to remember when making decisions based on model outputs:

The output is simply a prediction; it is not a recommendation.

Positive feedback loop: While building a prediction pipeline, make sure you understand how its components behave in action. Models often end up in a positive feedback loop, which does not help solve the intended problem.

- Writing the name of an imported function in a shell and running it gives us the source of that function, as shown below:

- `doc(function_name)`: a super convenient way to find the documentation of a function; the output also contains a link to the official documentation.
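Outside of fastai's own helpers, the Python standard library offers a similar way to look things up. The sketch below is not fastai's `doc`; it uses `inspect.getsource` and `inspect.getdoc` on `json.dumps`, a standard-library function picked only as a convenient example.

```python
import inspect
import json

# Source code of a function, as text (works for functions written in Python).
source_text = inspect.getsource(json.dumps)
print(source_text.splitlines()[0])

# Cleaned-up docstring of the same function.
doc_text = inspect.getdoc(json.dumps)
print(doc_text.splitlines()[0])
```

The same two calls should work on fastai functions once the library is imported, since they are ordinary Python functions.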

Code Explanation of example model trained in lesson 1

from fastai2.vision.all import *

Generally, importing a library with `*` is a bad idea because, in Python, it imports not only the names defined by that library but also whatever it pulled in from its dependencies, their dependencies, and so on, which can lead to namespace conflicts between functions of the same name from different libraries. However, the `fastai` library is designed so that importing with `*` gives us only the functions meant to be exported by that particular module (here `fastai2.vision.all`), not its dependencies. Also note that although the videos use `fastai2.vision.all` as the base library, with the current version of the fastai library we can use `fastai.vision.all`.
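The general Python mechanism for controlling what `import *` exposes is the module-level `__all__` list; that fastai relies on exactly this is my assumption. The sketch below builds two small in-memory modules with hypothetical names (`leaky_lib`, `tidy_lib`) to show the difference.

```python
import math
import sys
import types

def star_import(module_name):
    """Return the names that `from module_name import *` brings in."""
    namespace = {}
    exec(f"from {module_name} import *", namespace)
    return sorted(name for name in namespace if name != "__builtins__")

# A module without __all__: the dependency name `sqrt` leaks out.
leaky = types.ModuleType("leaky_lib")
leaky.load_images = lambda: "images loaded"
leaky.sqrt = math.sqrt
sys.modules["leaky_lib"] = leaky

# The same module with __all__: only the listed names are exported.
tidy = types.ModuleType("tidy_lib")
tidy.load_images = lambda: "images loaded"
tidy.sqrt = math.sqrt
tidy.__all__ = ["load_images"]
sys.modules["tidy_lib"] = tidy

print(star_import("leaky_lib"))  # ['load_images', 'sqrt']
print(star_import("tidy_lib"))   # ['load_images']
```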

path = untar_data(URLs.PETS)/'images'

This function downloads a given dataset and extracts it. If the dataset has already been downloaded or extracted, it does not repeat the respective step. fastai has predefined access to a number of datasets; for instance, `URLs.PETS` here points to the Oxford-IIIT Pet dataset, which we use as a cats vs. dogs image dataset. Here `untar_data` returns the POSIX path of the location where the data was decompressed.
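The `/'images'` part of the line above works because path objects overload the `/` operator to join path segments. A minimal sketch with the standard library, using a made-up dataset location (the real one depends on your fastai configuration):

```python
from pathlib import PurePosixPath

# Hypothetical location, for illustration only.
dataset_root = PurePosixPath("/home/user/.fastai/data/oxford-iiit-pet")

# `/` joins path segments, just like untar_data(URLs.PETS)/'images'.
images_dir = dataset_root / "images"

print(images_dir)       # /home/user/.fastai/data/oxford-iiit-pet/images
print(images_dir.name)  # images
```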

def is_cat(x): return x[0].isupper()

dls = ImageDataLoaders.from_name_func(
    path, get_image_files(path), valid_pct=0.2, seed=42, label_func=is_cat,
    item_tfms=Resize(224))

The next step is to tell fastai what this data is. In this case, using `ImageDataLoaders` tells fastai that these are images, located at the path given by `path`. `get_image_files` returns a list of POSIX file paths, one for every image in the dataset. `label_func` tells fastai, for each file, whether it is a cat or a dog. Here it uses the `is_cat` function, which returns True if the first letter of the file name is a capital. This works because of a property of the dataset: the file names of cat images start with a capital letter.

`valid_pct` reserves 20% (as set above) of the data, called the validation set, and does not use it for training. Instead, it is used to calculate `error_rate`, which shows how the model actually performs on unseen data. The motivation is to check that we are not overfitting. Overfitting happens for various reasons, such as using models that are really big or not using enough data. (TODO for next lecture: Find out the use of the `path` argument, if `get_image_files` already provides the list of dataset files.)
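The labelling and the validation split can be sketched in plain Python. This is not fastai's implementation: `split_train_valid` is a hypothetical helper, and the file names are made-up samples in the style of the dataset.

```python
import random

def is_cat(filename):
    # Cat images have file names starting with a capital letter.
    return filename[0].isupper()

# Made-up sample file names, for illustration only.
filenames = [
    "Abyssinian_1.jpg", "beagle_2.jpg", "Bengal_3.jpg", "pug_4.jpg",
    "Persian_5.jpg", "boxer_6.jpg", "Ragdoll_7.jpg", "samoyed_8.jpg",
    "Siamese_9.jpg", "shiba_inu_10.jpg",
]

def split_train_valid(items, valid_pct=0.2, seed=42):
    """Hypothetical helper: shuffle reproducibly, then hold out valid_pct."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_valid = int(len(shuffled) * valid_pct)
    return shuffled[n_valid:], shuffled[:n_valid]

train, valid = split_train_valid(filenames)
labels = {name: is_cat(name) for name in filenames}

print(len(train), len(valid))  # 8 2
```

Fixing the seed means the same files land in the validation set on every run, so error rates from different runs stay comparable.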

learner = cnn_learner(dls, resnet34, metrics=error_rate)

Here `cnn_learner` creates a learner, which is what does the training. We need to tell it what data to use, then what architecture to use (here we are using `resnet34`), and finally which metric to print out as it trains; here we are printing the error rate.
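The error rate is simply the fraction of validation items the model gets wrong, i.e. 1 minus accuracy. A plain-Python stand-in follows; fastai's own `error_rate` works on tensors, and the predictions and targets here are made up.

```python
def error_rate(predictions, targets):
    """Fraction of predictions that differ from the true labels."""
    wrong = sum(p != t for p, t in zip(predictions, targets))
    return wrong / len(targets)

# Made-up example: True means "cat", False means "dog".
predictions = [True, False, True, True, False]
targets = [True, False, False, True, True]

print(error_rate(predictions, targets))  # 0.4
```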

learner.fine_tune(1)

The `fine_tune` method does the actual training.

Question: I was confused about the roles of `cnn_learner` and `fine_tune`, since both seem to take part in training the model.

Answer: `cnn_learner` instantiates a `Learner` class with parameters such as the architecture, the data loaders, and the metric for evaluation (here `error_rate`), whereas `fine_tune` performs the training, here for 1 epoch (one complete pass over the training data, with the weights updated along the way). However, on running the `fine_tune` step we see two tables even though we asked for only one epoch. Please check this awesome explanation about why it does so.
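As far as I understand fastai's defaults, `fine_tune` has a `freeze_epochs` argument that defaults to 1: it first trains only the newly added head with the pretrained layers frozen, then unfreezes everything and trains for the requested number of epochs. That would account for the two tables. The sketch below only models that schedule in plain Python; `fine_tune_phases` is a hypothetical helper, not a fastai function.

```python
def fine_tune_phases(epochs, freeze_epochs=1):
    """Hypothetical helper listing the training phases and their lengths."""
    return [
        ("frozen", freeze_epochs),  # pretrained body frozen, new head trained
        ("unfrozen", epochs),       # all layers trained together
    ]

for stage, n_epochs in fine_tune_phases(1):
    print(f"{stage}: {n_epochs} epoch(s)")
```

With `fine_tune(1)` this gives one frozen epoch plus one unfrozen epoch, each reported in its own table.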

TODO before the next lesson:

- ☑ Setup GPU

- ☑ Run the code of lesson 1 ipynb

- ☑ Use documentation to gain insight into underlying working

- ☑ Basically, get comfortable with exploring fastai library/docs

- ☑ Important aspect of following a Top-Down approach to learning is experimenting and making sure we can run the code.

- ☑ Go through chapter 1 in the fastbook

- ☐ Personal Goal: Just like Cats vs Dogs dataset, try to run the code on Hot Dog vs Not Hot Dog classification